HCI Overview

What is Hyper-converged Infrastructure (HCI)?

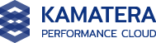

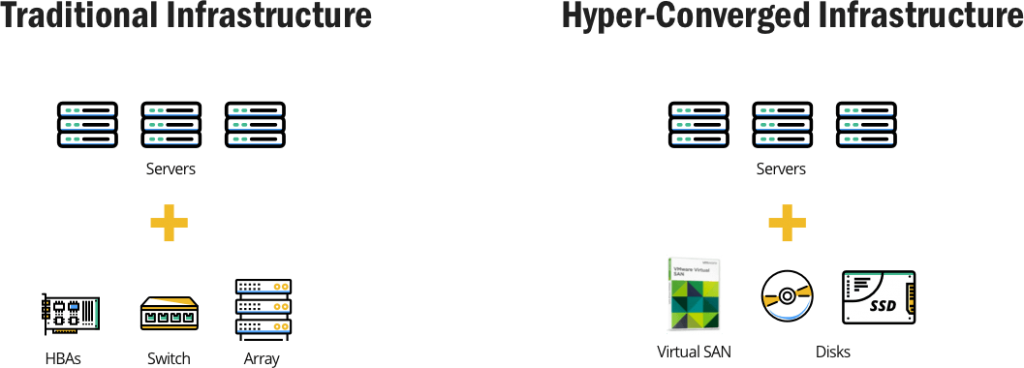

Hyper-converged infrastructure (HCI) is a technology that consolidates all functions of a data centre into an appliance that is easy to deploy, manage and scale out. It consolidates computing, networking and storage functions into a single resource pool. This increases infrastructure efficiency and performance while enabling an organization to easily deploy and manage its data centre without requiring high levels of expertise.

Hyper-convergence reduces the complexity and incompatibility issues associated with traditional data centres. Derived from converged infrastructure, where compute, storage and networking are consolidated, HCI adds software control and is usually referred to as a software-defined data centre. Instead of delivering server functions via hardware, an HCI delivers them through software.

Resources are purely software-defined and operations do not depend on proprietary hardware. For example, when storage is software-defined, it is not tied to particular hardware such as a SAN or NAS.

Hyper-convergence technology offers the flexibility and economics of a cloud-based infrastructure without compromising availability, performance, and reliability. It allows organizations to simply add building blocks to a data centre instead of purchasing different components and dealing with multiple vendors each year.

HCI delivers a wide range of benefits to both vendors and organizations. Integrating the data center hardware and software functions creates a unified, responsive and ready-to-scale infrastructure. This reduces the complexities of adding resources and new technologies, hence lowering deployment and management time and costs.

Basics of hyper-converged infrastructure systems and how they differ from traditional IT systems

HCI is essentially a software-defined data center that virtualizes all major components of a traditional system. This allows storage and networking functions to be delivered through software rather than through dedicated physical hardware. A typical solution comprises a distribution plane to deliver storage, networking and virtualization services, and a management plane for easy administration of resources.

“An HCI delivers lower operational costs, better performance and easier management than traditional infrastructures. It allows organizations to quickly deploy, reconfigure or scale the infrastructure to respond to changing business needs.”

What challenges in traditional data centers does hyper-convergence solve?

All aspects of a data center are combined within a single physical server to create a software managed resource pool. The platform uses intelligent software tools to combine the standard x86 server, networking, and storage resources, and create a single, easy to deploy, manage, and scale appliance. This eliminates the need for separate servers, storage arrays and networks as is the case with a legacy data center, hence addressing challenges such as:

- Reliance on expensive proprietary hardware

- Inefficient usage of resources

- Complex deployment and scaling processes

- Inability to quickly respond to changing business needs

- Scaling, incompatibility and performance issues

- Challenges in integrating and managing heterogeneous infrastructures

- Dealing with different vendors and third-party service providers

“Unlike traditional data centers, which require different IT experts for system administration, storage, networking, and software, hyper-convergence consolidates all the resources and then adds an easy-to-use single point of management. It, therefore, does not require many staff or high levels of expertise.”

Scaling in a traditional setup requires an organization to purchase and manually configure individual hardware products from different suppliers. Besides raising incompatibility issues, this is time-consuming and often requires complex configurations.

On the other hand, scaling in HCI is quicker and easier. Although there is still a need to add physical nodes, the management software configures the additional resources automatically. Incompatibility issues are rare since hyper-convergence vendors pre-test and verify the hardware before building an appliance.

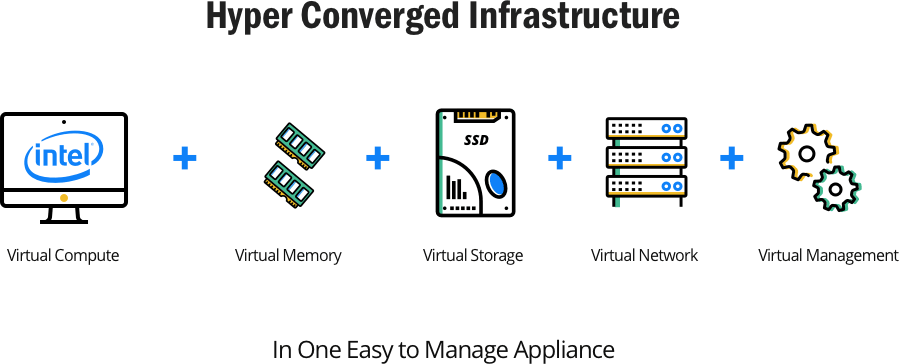

Differences between Hyper-convergence and convergence

Converged and hyper-converged infrastructures have similarities as well as differences. Each of the technologies employs a different operational model.

- While a converged infrastructure is more hardware-focused, a hyper-converged system is software-driven.

- Resources in both systems are grouped together and presented as a single product. However, each follows a different expansion model. A converged infrastructure (CI) uses a scale-up approach to add individual consumable resources such as storage, memory, and CPU.

- A hyper-converged system employs a scale-out expansion model in which customers increase capacity by simply adding extra nodes comprising all the main resources. As such, an HCI adds all the data centre resources at once rather than individually, which can be an advantage or a disadvantage.

- A converged infrastructure, unlike an HCI, can be broken down and its individual building blocks, such as servers or storage, used independently.

- While storage in a CI is physically attached to the server, an HCI has a storage controller function that runs as a service on each node in the cluster. This improves resilience as well as scalability: if storage fails in one node, the system simply points to another node with very little interruption.

- A hyper-converged system is a single appliance from a single vendor who provides unified support for all components. In contrast, the components in a converged architecture may come from different vendors, which can be a challenge when addressing issues or updating specific components.

- Usually, customers ask vendors to configure the CI product to run a specific workload. Such a solution will only support a specific application like a database or VDI, and it offers very little flexibility to alter the configuration and run other applications. An HCI is more flexible and can handle a wide range of applications and workloads.

Main components of a hyper-convergence infrastructure

Hyper-convergence consolidates all major server functions into a single platform using an x86 server. This puts together the server and corresponding hypervisors, storage and networking. Other features include data protection, replication, backup, public cloud gateways, and more. Instead of using different hardware and complex configurations to provide these services, an HCI provides them using a software-defined data centre approach.

A typical HCI node comprises a distributed file system for organizing and managing data, a hypervisor, and an optional network. The main hardware and software components include:

- x86 server hardware

- Network switches

- Storage devices

- HCI software – Hypervisor or virtualization software and other intelligent tools

Ideally, rather than have servers, network equipment, and storage arrays on their own, the HCI integrates all these in a single box. This may vary with some configurations where storage is an external box or cloud-based. However, even in such a case, all the storage from different devices is brought into a shared pool.

Although the technologies inside the appliance may differ according to the vendor, a typical node will have software-defined storage, automated workload placement and data tiering as well as high availability and non-disruptive expansion.

Network switches

These are used for scaling an HCI and integrating it with other infrastructures such as the cloud and the local area network. They enable data transfer between nodes and other building blocks. However, communication between virtual machines within a node uses software-defined networking.

Server virtualization software

This virtualizes a server’s computing capacity, storage, networking, and security, enabling admins to create various standard virtual computers using the same hardware configurations. Typical virtualization technologies include aSAN for storage, aSV for computing, and aNet for networking. However, there is more comprehensive HCI software that offers virtualization, data protection services, backup, disaster recovery, and other functions.

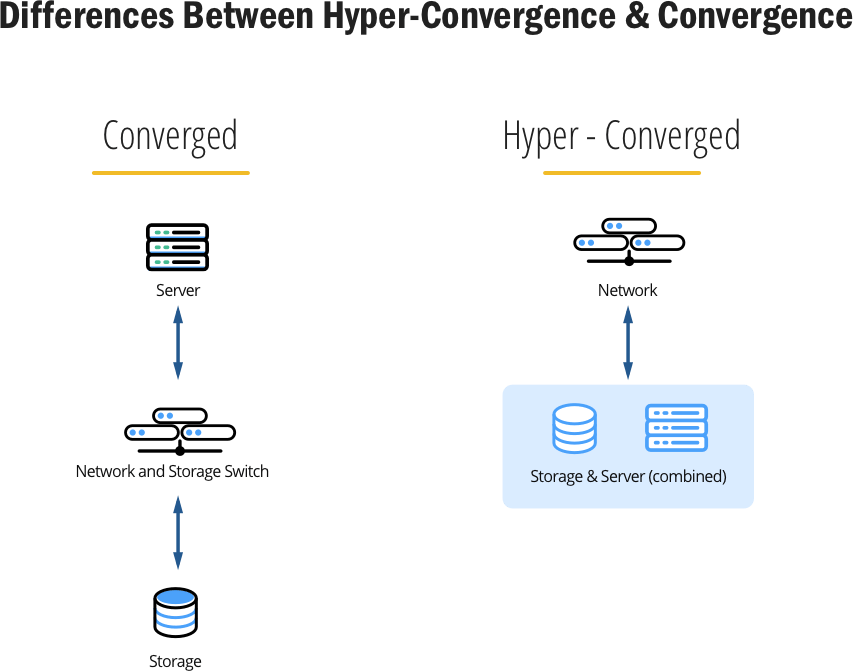

Hypervisor and its role in HCI

The hypervisor is software that runs between the physical server hardware and the guest operating systems. It plays a major role in an HCI, where it provides a hardware abstraction layer, workload management, adjacency, and containerization. Common functions include the management of the compute, storage and networking resources as well as the virtual machines running in the system.

A typical hypervisor comprises software tools to manage the platform and allow multiple operating systems and applications to share physical hardware resources. It creates and runs the virtual machines while coordinating access to all the hardware resources.

Upon server start-up, a hypervisor assigns resources such as CPU, memory, storage, and networking to each virtual machine based on allocations. It then allows multiple virtual machines to run independently while sharing the same physical resources. In addition, it enables hot migrations without interrupting operations or tasks.
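
The allocation step described above can be sketched in a few lines of Python. This is a minimal illustration, not any vendor's implementation: the `Host` class, its units, and the strict no-overcommit admission rule are assumptions made for the example (real hypervisors often allow CPU and memory overcommitment).

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    """Physical capacity of one node (hypothetical units)."""
    cpu_cores: int
    memory_gb: int
    vms: dict = field(default_factory=dict)  # VM name -> (cores, memory_gb)

    def free(self):
        """Return the (cores, memory_gb) not yet allocated to any VM."""
        used_cpu = sum(alloc[0] for alloc in self.vms.values())
        used_mem = sum(alloc[1] for alloc in self.vms.values())
        return self.cpu_cores - used_cpu, self.memory_gb - used_mem

    def start_vm(self, name, cpu, mem):
        """Admit the VM only if its allocation fits in what is left."""
        free_cpu, free_mem = self.free()
        if cpu > free_cpu or mem > free_mem:
            return False
        self.vms[name] = (cpu, mem)
        return True


host = Host(cpu_cores=16, memory_gb=64)
results = [
    host.start_vm("web", 4, 16),
    host.start_vm("db", 8, 32),
    host.start_vm("batch", 8, 8),  # rejected: only 4 cores remain
]
print(results, host.free())
```

Each admitted virtual machine runs against its own allocation, which is what lets several of them share one physical host without interfering with each other's reserved resources.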

The hypervisor controls how data moves across different machines through the network. Generally, data is transferred through dedicated switches between different externally connected machines. However, the hypervisor has software-based networking that creates virtual connections and allows data transfer between virtual machines within a node.

Hypervisors are usually available as standalone products or simply as a feature that comes as part of a larger HCI suite. If a feature, the customer has little choice since it is usually integrated into the appliance. Vendors may use their own custom hypervisors or modify existing types to work with their appliances.

Most HCIs support a number of popular hypervisors such as VMware ESXi, Microsoft Hyper-V and Kernel-based Virtual Machine (KVM). Some HCI providers, such as Nutanix, have their own KVM-based hypervisor but also support others such as VMware.

Types of Hypervisors and how to select a suitable one

Due to the role virtualization plays in HCI, the choice of a hypervisor is critical. It should closely match requirements and ensure better availability, performance, and reliability of the infrastructure. There are different types of hypervisors, most of which fall under the following two categories.

Off the shelf Hypervisor

Typical products include premium software such as Microsoft Hyper-V, VMware ESXi, as well as open source systems such as Kernel-based Virtual Machine. Most often, small organizations without developers will settle for these types of hypervisors.

Although off-the-shelf hypervisors are generally flexible, organizations with IT developers can fine-tune further to suit their requirements.

Custom built hypervisor

Some vendors or organizations may build their own hypervisors and customize them for specific hardware and applications. Vendors will often supply device-specific hypervisors as features of a hyper-converged package. This is usually easier to use and less expensive than an off-the-shelf solution. In addition, customizing for specific HCI components eliminates redundant features that an off-the-shelf hypervisor may carry for other products.

Choosing a hypervisor

In most cases, the choice of hypervisor depends on the HCI product a customer purchases. A customer should first analyze what a vendor is offering to determine if it will address current and future business needs. Ideally, an HCI vendor must also be in a position to respond and adjust their services to keep pace with changing business requirements.

Factors that influence the choice of a hypervisor include its capabilities, ease of use, management, scalability, compatibility, and cost. Also important are its performance, reliability, availability, and ability to integrate into an organization’s virtual infrastructure.

Using Hyper-convergence Infrastructure

Benefits of hyper-convergence

HCI extends the capabilities of a data centre beyond the limitations of traditional systems. Integrating all server functions together simplifies deployment, management, sizing, and scaling as well as distributing and migrating workloads.

Major benefits of hyper-convergence are:

Operational efficiency

Simplified management lets IT staff do more without being highly specialized. Benefits include easier scaling in or out and less complex integration and maintenance processes. It also removes the need for separate IT departments, since a single team can manage everything.

Improved business agility

Policy-based management in HCI enables quick deployment of applications in addition to customizing the infrastructure to suit specific workloads. HCI also provides a flexible platform capable of responding to existing and future IT needs. In particular, it is easier to adjust resources to meet the dynamic workloads of present-day business environments.

Easy scaling

Scaling is easy as it only requires adding extra nodes depending on business needs. In addition, a business can remove nodes when the demand for resources reduces.

Improved data protection and disaster recovery

HCI simplifies storage, data backup, and disaster recovery processes. Unlike traditional systems, data backup and disaster recovery are inbuilt into the HCI. As such, these processes, which are also automated, become much simpler and more efficient. In particular, disaster recovery is usually very fast, and almost instant. Each node contributes to a reliable and redundant pool of shared storage such that even if one node fails, the workload is shifted to a good node with little or no interruption to operations.

Simplifies hybrid cloud deployment

HCI supports hybrid cloud environments in which an organization keeps sensitive data and applications on-premises and, at the same time, has some workloads and data in a public cloud. This enables seamless and efficient movement of virtual machines between public clouds and on-premises data centers.

Faster deployments

An HCI is usually a single, fully integrated solution that takes about half an hour to set up and 5 minutes to add another node. This reduces installation time and other challenges associated with setting up a traditional server system comprising different components.

Reducing data centre footprint

Consolidating all the components of a data center into a single, compact appliance enables organizations to significantly reduce space, cabling and power requirements.

Low cost

HCI has the potential to lower both capital and operational expenses. This arises from combining inexpensive commodity hardware with a simple management platform.

The technology uses commodity server economics and does not rely on expensive proprietary hardware. Most often, HCI appliances use the latest hardware and utilize it efficiently. As such, there is less of the overprovisioning that occurs in traditional systems.

Disadvantages of hyper-convergence

Although hyper-convergence allows organizations to efficiently utilize their resources at lower costs, its customers may face some challenges. Organizations deploying HCI may have to deal with unexpected licensing costs, scalability constraints and other limitations. These issues are not evident in the initial stages, but may crop up eventually if the IT team fails to pay attention to details during the planning and implementation phases.

Scalability issues

HCI is flexible and easy to upgrade as workload or demand for more resources increases. Usually, it automatically detects and allocates new resources evenly across the workloads.

However, the system is usually designed to distribute a combination of resources. Sold as a package, most HCI solutions do not have the option to upgrade individual components. This makes it hard to just add a resource such as the CPU power without adding others such as storage, bandwidth, and memory. A client must add all resources, including those not required. This adds unnecessary expenses, energy and space requirements.

To reduce wasting resources or spending on capacity that will never be used, there are now newer systems that offer compute- or storage-centric nodes. This enables customers to only add what they require.
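
The cost of scaling every resource together can be made concrete with a small sizing calculation. The sketch below is illustrative only: the node specification and the workload figures are hypothetical, and the functions `nodes_needed` and `stranded` are names introduced for this example.

```python
import math


def nodes_needed(demand, per_node):
    """Nodes required so that every resource in `demand` is covered."""
    return max(math.ceil(demand[r] / per_node[r]) for r in demand)


def stranded(demand, per_node):
    """Capacity purchased but not required, per resource."""
    n = nodes_needed(demand, per_node)
    return {r: n * per_node[r] - demand[r] for r in demand}


# Hypothetical general-purpose node: 32 cores, 512 GB RAM, 20 TB storage.
node = {"cores": 32, "ram_gb": 512, "storage_tb": 20}
# Hypothetical CPU-heavy workload.
demand = {"cores": 256, "ram_gb": 1024, "storage_tb": 40}

count = nodes_needed(demand, node)
surplus = stranded(demand, node)
print(count, surplus)
```

In this example the CPU requirement alone dictates eight nodes, leaving 3,072 GB of memory and 120 TB of storage unused. A compute-centric node type would shrink that surplus, which is the motivation for the newer node options mentioned above.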

High power consumption

Power requirements for HCI systems are usually higher than those of traditional rack-based systems. This is due to the high density of equipment in the systems. When designing a data room for an HCI, it is critical to ensure that the power system will meet both current and future requirements.

Risks of vendor lock-in

Customers should avoid highly proprietary HCI systems with the potential to lock them into particular hardware or platforms. This prevents incompatibility issues when deploying hybrid cloud computing or integrating with other HCI products. Generally, a hybrid cloud requires the different systems to work together and workloads to move seamlessly across boundaries. Each organization should aim to acquire a highly compatible hyper-converged platform that can work with other critical technologies.

Additional infrastructure costs

Organizations must address other challenges arising from different hardware combinations. This includes upgrading network equipment such as switches to meet unique requirements for HCI.

When to use hyper-convergence or convergence

The choice between a converged and hyper-converged infrastructure depends on the application and circumstances.

Generally, converged infrastructure is tailored for specific applications in large enterprises, while HCI targets small and mid-range organizations that do not require high levels of customization.

To make the right choice, each organization must first assess its workloads and the level of performance, agility, and scalability it requires. Although some businesses can deploy just one, those with a wide range of requirements can have a mix of the two infrastructures.

There is also a big difference in how these two options work with the cloud. For instance, converged infrastructure is ideal for building a private cloud in a highly virtualized data center. This allows organizations to enjoy the benefits of a cloud system without exposing their applications and data to the public.

In addition, a CI is a good choice for Platform 2.0 hardware-intensive applications such as:

- Customer Relationship Management (CRM)

- Enterprise Resource Planning (ERP)

- Enterprise messaging

- SAP workloads

- Database grids

HCI, on the other hand, is suitable for applications that require agility and quick, easy, low-cost scaling of resources up or down. It works best for Platform 3.0 applications that require cloud-based environments, such as:

- Life cycle and cloud applications

- Big Data analytics

- Application development environment

HCI provides a good platform for public and hybrid cloud deployment, especially due to the ease of integrating with hypervisors running on public clouds. This allows the organizations to manage the automation of functions between the cloud and the in-house data center.

HCI is ideal for organizations looking to reduce complex installation and maintenance procedures. In addition, it appeals to organizations that need to change their systems quickly to meet dynamic business environments and requirements.

Converged systems are best suited to running specific tasks and may not work properly with mixed workloads, such as running an e-commerce application alongside a virtual desktop infrastructure. There is also the risk of vendor lock-in or the inability to add individual resources such as memory or storage in over-engineered systems.

VDI in hyper-converged infrastructure

Hyper-convergence simplifies the process of planning and deploying a virtual desktop infrastructure in organizations. One of the main benefits is eliminating the up-front design and integration required when deploying large VDI systems. In addition, it allows organizations to quickly and easily scale as workloads increase.

As hyper-converged infrastructures become more common, organizations are moving their virtual desktops as well as remote and branch offices away from traditional storage systems.

Implementing a traditional infrastructure that supports VDI is usually a complex process. This is easier in an HCI due to virtualization and the ease of adding or removing nodes. In addition to reducing the complexity of deploying VDI, hyper-convergence will also boost performance and ease the management of the entire system.

Organizations using virtual desktops on HCI will, however, need to reorganize their IT departments and have VDI admins perform broader roles. For instance, they must start managing the entire hyper-converged infrastructure instead of specializing in virtual desktops, which are only a part of the infrastructure.

In legacy systems, an IT shop or organization has several IT departments handling different aspects of a data center. These include separate teams for storage, for networking and for servers. HCI, in contrast, requires simpler support and does not need different specialists or high expertise. This means that a VDI expert can also handle other tasks and support the entire server.

Storage in HCI

An HCI is usually a commodity server with highly optimized storage, either inside the physical machine or externally connected via a high-speed bus. A software-defined storage (SDS) stack then glues all these components together to provide a single logical storage pool that is efficient and easy to manage. This enables automation of storage and integration with other server functions such as compute and networking, all of which are then placed under one management layer.

SDS is policy-based storage with benefits such as simpler capacity planning, better handling of transient workloads, optimized performance and more. Usually, HCI appliances have their own data store pool and distributed file systems. Others have specific hardware to accelerate data deduplication and compression processes, increasing speed and efficiency while lowering costs.

SDS provides value-add functions such as disk-to-disk mirroring, deduplication, compression, incremental snapshots, thin provisioning and others that expensive traditional models such as a SAN array or shared storage offer. The integrated storage support for data deduplication, as well as snapshots, allows copying of data within a cluster or between different locations.
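
To show what deduplication means in practice, here is a minimal sketch of block-level deduplication using content hashes. It is an assumption-laden toy, not how any particular SDS product works: the `DedupStore` class, the fixed 4-byte block size and the in-memory dictionaries are all chosen for illustration.

```python
import hashlib


class DedupStore:
    """Stores each unique block once, keyed by its SHA-256 digest."""

    def __init__(self, block_size=4):
        self.block_size = block_size
        self.blocks = {}  # digest -> block bytes
        self.files = {}   # file name -> ordered list of digests

    def write(self, name, data):
        """Split data into fixed-size blocks and store only new ones."""
        digests = []
        for i in range(0, len(data), self.block_size):
            block = data[i:i + self.block_size]
            digest = hashlib.sha256(block).hexdigest()
            self.blocks.setdefault(digest, block)
            digests.append(digest)
        self.files[name] = digests

    def read(self, name):
        """Reassemble a file from its block references."""
        return b"".join(self.blocks[d] for d in self.files[name])


store = DedupStore(block_size=4)
store.write("vm1.img", b"AAAABBBBAAAA")
store.write("vm2.img", b"AAAACCCC")
print(len(store.blocks))  # five blocks written, three stored
```

Because identical blocks are stored once, copying or replicating a file that shares most of its blocks with existing data only needs to transfer the blocks that are missing at the destination.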

Benefits of storage in HCI include

- Better performance and reduced TCO

- The ability for organizations to implement more flexible infrastructure

- Reduced cost and complexity, allowing even small and medium-sized organizations to implement the technology.

- SDS consolidates all the storage devices to create a less costly and easy-to-manage logical storage pool that is not tied to expensive proprietary hardware or software.

The majority of HCI appliances use a mix of spinning disks and solid-state storage in each node to handle both sequential and random workloads efficiently. The fast solid-state storage allows HCI appliances to support intensive workloads, including multiple VDI boots and logins.
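
One simple way to picture how a hybrid node might use its two media types is a frequency-based tiering policy. The following sketch is a deliberately naive assumption, not a description of any vendor's tiering algorithm: `place_blocks`, the access counts and the number of SSD slots are hypothetical.

```python
def place_blocks(access_counts, ssd_slots):
    """Put the most frequently accessed blocks on SSD, the rest on HDD."""
    # Sort by descending access count, breaking ties by name for determinism.
    ranked = sorted(access_counts, key=lambda b: (-access_counts[b], b))
    hot = set(ranked[:ssd_slots])
    return {b: ("ssd" if b in hot else "hdd") for b in access_counts}


# Hypothetical access counts collected over some interval.
counts = {"boot": 900, "logs": 15, "db_index": 400, "archive": 2}
placement = place_blocks(counts, ssd_slots=2)
print(placement)
```

Frequently read data such as boot images lands on the solid-state tier, while rarely touched data stays on cheaper spinning disks.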

Hyper-convergence data protection, disaster recovery strategies, and tools

In an HCI, workloads, applications, and data are intelligently spread across different nodes and, therefore, several physical disks. This improves resilience in case of a fault in one of the hardware resources. In the event of a failure in a storage device or a node, the HCI software automatically shifts the workload to a working secondary appliance. Deduplication allows fast recovery by making it easy to move workloads to another location or node without moving a lot of data.

By default, an HCI system automatically replicates data across multiple nodes, improving fault tolerance. This makes it easy to replace a failed node without downtime. Some organizations may install and replicate clusters in multiple locations to avoid downtime in case of a disaster.
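
The effect of replicating data across nodes can be sketched as follows. This is a simplified model under stated assumptions: two copies per block, round-robin placement, and the helper names `place_replicas` and `readable_after_failure` are all invented for the example; real systems use more sophisticated placement and rebuild logic.

```python
def place_replicas(block_ids, nodes, copies=2):
    """Assign each block to `copies` distinct nodes, round-robin."""
    if copies > len(nodes):
        raise ValueError("need at least as many nodes as copies")
    placement = {}
    for i, block in enumerate(block_ids):
        placement[block] = [nodes[(i + k) % len(nodes)] for k in range(copies)]
    return placement


def readable_after_failure(placement, failed_node):
    """Blocks that still have at least one copy on a healthy node."""
    return {
        block
        for block, holders in placement.items()
        if any(node != failed_node for node in holders)
    }


nodes = ["node-a", "node-b", "node-c"]
placement = place_replicas(["b0", "b1", "b2", "b3"], nodes, copies=2)
survivors = readable_after_failure(placement, "node-b")
print(placement)
print(sorted(survivors))
```

With two copies on distinct nodes, every block remains readable after any single node fails, which is why the failed node can be replaced while the cluster keeps serving data.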

Disaster recovery features in a given HCI solution may vary according to the hypervisor and the services a vendor provides. Below are some data protection and DR features from popular vendors:

- Nutanix: data backup, replication, deduplication and DR

- Pivot3: virtual replication, disaster recovery

- Hewlett Packard Enterprise (HPE) SimpliVity: Backup and disaster recovery

- Cohesity: backup, copy data, archiving, recovery

- Rubrik Alta: backup and recovery

- Cisco HyperFlex: replication of virtual machine images, and disaster recovery

- VMware Virtual SAN: stretched clusters, usually between different geographic locations

Other than offering optimized performance, storage in a hyper-converged appliance provides good data protection by working as a data backup and disaster recovery medium. One of the greatest benefits of this arrangement is the HCI’s ability to perform instant recovery in case of a crash or a disaster.

Disaster Recovery Strategies

HCI borrows some of its data protection and recovery processes from legacy systems. The common strategies include:

Multi-Nodal Storage

HCIs provide multi-nodal storage in which an appliance initially comprises two or three storage nodes. In a three-node appliance, one of the nodes oversees data mirroring across the other two while approving synchronization of the mirrored data and volumes. A two-node system, however, does not use a dedicated disk to oversee the operations. A three-node system may be more costly because, in addition to the extra hardware, most vendors tend to issue a separate software license for each of the three nodes.

High Availability approach

High availability architecture addresses the possibility of a node controller failure by clustering a primary server with a mirrored server. Operating these in an active-active cluster, where each server handles an identical workload, ensures continuity and little or no interruption in the event of a server failure.

Another option is an active-passive mode, where one node remains on standby. It still mirrors data and becomes active when it is needed to support the workload. If the primary server fails, the secondary activates automatically and takes over the workload. This shift is usually smooth, but some data loss may occur.
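
The active-passive behaviour, including why some data loss is possible, can be illustrated with a toy model. It assumes the standby mirrors the primary asynchronously at discrete sync points; the `ActivePassivePair` class and its methods are hypothetical names for this sketch rather than any product's API.

```python
class ActivePassivePair:
    """Primary serves the workload; the standby mirrors it and waits."""

    def __init__(self):
        self.primary_data = []
        self.standby_data = []
        self.active = "primary"

    def write(self, record):
        """All writes land on the primary first."""
        self.primary_data.append(record)

    def sync(self):
        """Mirror everything written so far to the standby."""
        self.standby_data = list(self.primary_data)

    def fail_primary(self):
        """Promote the standby and report writes that were never mirrored."""
        lost = self.primary_data[len(self.standby_data):]
        self.active = "standby"
        return lost


pair = ActivePassivePair()
pair.write("a")
pair.write("b")
pair.sync()
pair.write("c")             # written after the last sync
lost = pair.fail_primary()  # the standby takes over without "c"
print(pair.active, pair.standby_data, lost)
```

Anything written between the last synchronization and the failure is missing on the promoted node, so the mirroring interval bounds how much data can be lost.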

Cloud seeding

Cloud seeding is usually employed in big data applications. It involves copying bits from the data farm to a separate storage node positioned behind an SDS controller. This could be a tape library or other media. Since this operation uses a linear tape file system that does not use the server processor, it has little effect on performance. Tape backups are portable and can be restored into any compatible infrastructure after a large-scale disaster or any other event that destroys an entire data center.

Cloud disaster recovery

Most HCI appliances include options to use the cloud as backup or replication targets, while others may allow customers to fully deploy applications there.

Some vendors integrate a disaster recovery capability into the HCI. For example, a customer can configure an online recovery site in a remote office via an active-active deployment. Similarly, the HCI may offer an active-passive disaster recovery model where the recovery site is a remote data center, a public cloud or a third-party host.

Conclusion

Current trends and future of HCI

HCI provides a faster, ready-on-demand solution with shorter planning cycles that is suitable for both cloud-native and traditional applications. It provides a platform that quickly responds to changing business needs and is likely to drive long-lasting changes in server, networking and storage architectures as well as in the way IT teams operate. The possible impacts of HCI deployments include:

Consolidating IT teams

Data center deployments will move from traditional three-tier silos to flexible and easy-to-manage hyper-converged architectures. By consolidating the management of compute and storage, HCI simplifies management while giving organizations an opportunity to merge different teams. This will help them cut costs and improve responsiveness and performance.

Outpacing traditional storage solutions

HCI has shorter and faster development cycles for both hardware and software than proprietary storage systems such as SAN and NAS. Since storage is built into the infrastructure, businesses do not need to purchase storage from third-party vendors, nor do they need to set up and maintain separate storage hardware. This is likely to shrink the market for traditional storage products such as SAN.

Rack servers to come back

HCI has the potential to reverse the decline of the blade server market. The ability to add and share storage on a commodity server allows organizations to build high-volume and scalable servers at low cost. Since the new servers provide virtual resources for in-house users, organizations will invest more in server platforms with higher storage capacities to suit a wide range of application needs.

Change in vendors' business models

With time, most vendors will stop selling separate services such as storage and instead consolidate them into a single platform that offers a complete virtualized infrastructure. The majority of vendors that currently provide server, storage, and virtualization technologies separately will be well placed to integrate these and produce cost-effective HCI appliances.

Summary

The majority of today’s data centers are evolving from hardware-based to software-defined hyper-converged infrastructures. This enables businesses and organizations to focus on applications, as the software schedules the respective resources according to requirements.

Benefits of hyper-convergence include the allocation and re-allocation of resources such as computing capacity, storage, and networking without compromising or changing the static hardware components. This brings flexibility, easy deployment, simple management and scaling, efficient utilization of resources and low operational costs.

Because of its ability to meet a wide range of IT needs, it suits organizations seeking cost reductions, agility and performance improvements. Scalability allows even small organizations to start with a minimum number of nodes and gradually add more as their business needs grow.

Assessing the current infrastructure and where the organization wants to be in about three years is important. This assessment should look at how the organization is using compute, storage and networking both on-premises and in the cloud, as well as how it is managing application development and other functions. This will help identify any gaps and show whether an HCI solution will improve service delivery at lower costs.

HCI is suitable for virtualized workloads, data analytics, as well as for mission-critical and business applications. It can be used for in-house clouds, virtual desktop infrastructures, remote and branch offices, storage and data protection.

With the technology maturing, hyper-convergence will handle even the extreme enterprise workloads. Typical end-users include financial institutions, manufacturing, IT service providers, government departments, healthcare and more.

However, hyper-convergence does not work for all applications, especially those requiring low latency or high I/O rates.

Before deploying a hyper-convergence solution, each organization must ensure the product they choose will provide the required level of performance while adding flexibility, scalability, manageability and cost reduction.